First it was an unclaimed bottle of water, now a Blackberry PDA left behind by a passenger.

Flights in the US are being diverted for both reasons. Yesterday a United Airlines flight to California from Atlanta was diverted to Dallas after a Blackberry was found on board. A spokesman for SFO airport said the plane was searched and nothing unusual was found. It was just a precaution.

By what calculus, I wonder, does a Blackberry constitute an actionable threat? How do you reach the point where diverting a jet plane seems a reasonable response? When fear has been instilled in you, you become afraid of ordinary events.

One of my memories of the days following 9/11 is of a news story of a woman who worked in a cafe in Georgia who called the authorities after two men "looked at her" suspiciously, and were dark of skin. After the men had been tracked down and detained it turned out they were med students on their way to a conference in Florida. Yes, that's right, they were our future doctors (they didn't even recall the woman).

What tools or avenues of understanding do we have that could account for our situation? What would happen if we assembled our best minds and asked them to explain risk, threat and fear? While I'm no expert, it seems to me that there are three possible categories of explanation:

1. The political explanation. In John Dean's new book, Conservatives without Conscience, he argues that fear is constructed or manufactured by politicians seeking to strengthen their power. (Dean, you may recall, was in the Nixon administration.) For Dean this is particularly true of conservatives, who have displayed alarming tendencies over the past 15 years toward an authoritarian policy and way of life. Dean writes:

Frightening Americans...has become a standard ploy for Bush, Cheney, and their surrogates.

They add a fear factor to every course of action they pursue, whether it is their radical foreign policy of preemptive war, their call for tax cuts, their desire to privatize social security, or their implementation of a radical new health care scheme. This fearmongering began with the administration's political exploitation of the 9/11 tragedy, when it made the fight against terrorists the centerpiece of its presidency (p. 172).

Former Vice President Al Gore made similar remarks in a keynote speech he gave in 2004 (printed in Social Research, vol. 71(4), 779-793). Noting that terrorism aims to instill fear, Gore addressed the question of whether the Bush administration has deliberately leveraged that fear for political gain: for example, by accusing Max Cleland, who lost three limbs in Vietnam, of being unpatriotic, and by diverting attention away from a poorly performing economy (and government).

Inasmuch as politics is a discourse, if this is where our fear comes from, then it can be resisted and countered, both by those at the everyday level (found a Blackberry? well, losers weepers, finders keepers), and by those with a pulpit. Keith Olbermann of MSNBC yesterday had sharp words for this administration's politicizing of 9/11:

Terrorists did not come and steal our newly-regained sense of being American first, and political, fiftieth. Nor did the Democrats. Nor did the media. Nor did the people. The President -- and those around him -- did that.

Similarly, Dan Froomkin, writing in the Washington Post--again, on 9/11--observed:

What's also telling, as usual, is what Bush didn't say yesterday, and doesn't say, period.

He doesn't say we won't allow ourselves to be terrorized, and we won't be afraid. (That would run counter to the central Republican game plan for the mid-term election.) He doesn't say that in our zeal to fight the terrorists, we won't give up the qualities that make America great. He acknowledges no mistakes, he calls for no sacrifice, he refuses to reach out to those who disagree with him.

2. The cognitive/statistical/evolutionary explanation. Perhaps we have evolved a cognitive system that is terribly bad at assessing risk and threat, at least in terms of judging relative uncertainties. In experiments conducted during the 1970s and 80s, the psychologists Daniel Kahneman and Amos Tversky showed that people are pretty bad at making judgements where probability is involved.

Unfortunately this covers a lot of ground, and includes scientific judgements of statistical likelihood as well.

Kahneman and Tversky showed, for example, that people tend to ignore or be unaware of the prior probability or "base rate" likelihood of something occurring. Let's say we are given the information: "Steve is very shy and withdrawn, helpful, but not very interested in people." Is he more likely to be a farmer, a librarian, an airline pilot, a salesman, or a physician?

The fact that there are many more farmers than librarians (say; K&T provided base-rates in their empirical studies) enters into the probability of which job he has. But people don't think like that. They construct a cognitive "frame" or scenario in which the qualities given are adduced to be more "librarian-like" than "farmer-like."
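To see how much the base rate can matter, here is a minimal sketch of the calculation in Python. The numbers are invented for illustration and are not the base rates from Kahneman and Tversky's studies: I assume 20 farmers for every librarian, and that the description of Steve fits 40% of librarians but only 10% of farmers. The helper `posterior` is my own hypothetical name.

```python
def posterior(priors, likelihoods):
    """Apply Bayes' rule across mutually exclusive hypotheses."""
    # Weight each hypothesis by (base rate) x (how well the description fits).
    joint = {job: priors[job] * likelihoods[job] for job in priors}
    total = sum(joint.values())
    # Normalize so the probabilities sum to 1.
    return {job: weight / total for job, weight in joint.items()}

# Hypothetical numbers, NOT taken from the original experiments.
priors = {"farmer": 20 / 21, "librarian": 1 / 21}   # assumed base rates
likelihoods = {"farmer": 0.1, "librarian": 0.4}     # assumed fit of the description

steve = posterior(priors, likelihoods)
for job, probability in steve.items():
    print(f"{job}: {probability:.2f}")
```

Under these made-up figures Steve is still about five times more likely to be a farmer (roughly 0.83 against 0.17), even though the description sounds four times more "librarian-like."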

Similarly, today it was announced that the CEO of Bristol-Myers Squibb would step down. Apparently the Justice Department is moving to investigate whether the company prevented a generic equivalent of one of its big money-makers from coming on to the market. In two separate news reports on this story it was also pointed out that the CEOs of Merck and Pfizer had also stepped down or been forced out in the last couple of years. In one story these developments were used to conclude that big pharma is currently in trouble.

But is that a justifiable conclusion? Not until we know the base rate of CEO turnover. Also reported today for instance was the story that HP is losing its CEO.

Another thing we tend to do is misunderstand chance. In coin tosses of a fair coin, which is more likely:

a). H-T-H-T-T-H

b). H-H-H-T-T-T

c). H-H-H-H-T-H?

Most people say a) is more likely than b), which in turn is more likely than c). In fact, any specific sequence of six tosses of a fair coin has the same probability, (1/2)^6 = 1/64, so all three are equally likely. (A related phenomenon is the gambler's fallacy.)
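The coin-toss claim is easy to check with a short Python sketch. It assumes a fair coin and independent tosses; the function names are mine. It also computes the chance of getting a given number of heads in any order, which is one plausible reason the intuition goes wrong: we may be judging the class of "mixed-looking" outcomes rather than the one exact sequence.

```python
from fractions import Fraction
from math import comb

def sequence_probability(sequence):
    """Probability of one exact sequence of fair, independent tosses."""
    return Fraction(1, 2) ** len(sequence)

def heads_count_probability(n_tosses, n_heads):
    """Probability of seeing n_heads heads in n_tosses, in any order."""
    return Fraction(comb(n_tosses, n_heads), 2 ** n_tosses)

for label, seq in [("a", "HTHTTH"), ("b", "HHHTTT"), ("c", "HHHHTH")]:
    heads = seq.count("H")
    print(label, seq,
          "exact sequence:", sequence_probability(seq),
          "| this many heads in any order:", heads_count_probability(len(seq), heads))
```

Each exact sequence comes out at 1/64. What differs is the size of the class each one belongs to: three heads in six tosses happens 5/16 of the time, five heads only 3/32 of the time.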

It can also be shown that we assess the likelihood or risk of something by how easy it is to bring instances of it to mind. So if you know of someone who died of heart failure, you are likely to rate the risk of heart failure as higher in general. In like manner, if you can bring to mind a terrorist attack, you are more likely to accept that terrorist attacks are likely.

Another bias in thinking occurs when we are given an initial number or attribute and become fixated on it, even when we are allowed or encouraged to change it. In an experiment where people had to judge the percentage of United Nations member states that are African, for example, a random number was first generated with a roulette wheel. People were then asked to say whether this number was too high or too low, and were allowed to change it. People to whom the roulette wheel had assigned a high number ended up giving a high estimate of the percentage, and vice versa. The initial number "sticks" even when we reject it as wrong and can change it.

These findings indicate that much of the time people think heuristically and with "biases." This can be the case for trained statisticians as well as lay-people. For example, in a 2000 study, people recognized and accepted that the estate tax, if rescinded, would benefit only the top 1% of earners in this country. However, 39% of them thought that they were already in the top 1% or would be there "soon" (source)!

In summary, people seem to make judgements based on heuristics that capture an overall "frame" of the problem, rather than strict statistical probabilities. If the predominant frame is changed, their judgements could perhaps also be changed.

3. The radical-structuralist explanation. In this explanation deep-seated structural changes are identified as the causes of risk, threat and fear. One example is the ever-present need for capital to colonize new domains in order to make higher profits (just maintaining productivity is insufficient, profits must actually always grow--nobody wants stagflation). There is a fear that the money will no longer be coming in as fast as it needs to. This leads to a general nervousness which is reflected in the stock market. Notice how performance is now less about production and more about the finances--the financialization of the economy (stock value for instance, see Enron).

However, a stronger explanation, related to the first (that fear is useful to sustain political advantage for the right), is that concentrating on threat and dangerousness makes it easier for government to operate. This idea, developed by the French thinker Michel Foucault and colleagues in the 1970s, is part of a political philosophy that is sometimes called "governmentality." In this case, however, fear is not the exclusive strategy of conservatives but rather is built into the modern (neo)liberal state.

A related idea was suggested by Ulrich Beck in the early 1990s in his book Risk Society. Beck argues that modernity itself is dominated by a philosophy of risk. If risk is threat, then we need a bevy of experts, preferably technical experts, to tell us about it (a point also made by Foucault).

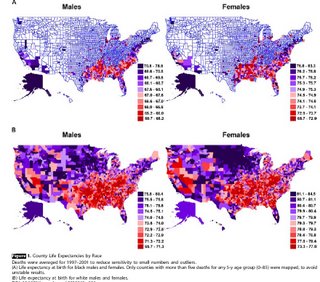

A key component of this philosophy (adopted at various times according to Foucault, but increasingly from the 17th century onwards, so that it is actually a constitutive part of modern societies--take insurance for example) is that instead of dealing with subjects -- individuals -- government now dealt with groups or more accurately with "populations."

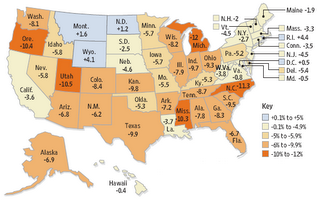

Along with this therefore came a suite of tools and techniques (including mapping) that dealt with the population. Statistics, for one (invented in the 19th century for reasons of political economy, I paraphrase a bit). The thematic map, for another. And surveillance for yet another. Birth rates, death rates, age of marriage, number of children (collectively known as biopolitics) -- all of these became of interest in order to gain the security of the state.

Now the state could look at groups and assign them a risk factor (e.g., Muslims and mosques, which the FBI looked at after 9/11). If you were a member of a group your dangerousness could be assessed. Note that this reverses the assumed practice of the criminal justice system. We assume that we are of interest to the criminal justice system to the extent that we have committed a crime, that is, we become of interest after wrongdoing. That is, we are marked while guilty.

In the dangerousness/risk mode of government however, we become of interest before any wrongdoing. That is, we are marked while innocent. This is the same practice as stereotyping and profiling (racial profiling for example).

Of course a lot of people reject profiling, but there is no doubt that it is used in many areas of our lives, from geographical profiling (market segmentation companies for example, committing the ecological fallacy with every zipcode lookup!) to our insurance rates -- not based on our personal attributes but on the group (zipcode or age group or whatever) that we "belong" to -- to the police pulling over drivers of a certain racial profile on the New Jersey turnpike ("DWB").

* * *

These three explanations of our current climate of fear, risk and threat are not necessarily mutually exclusive. For all I know they could all three be working. If they are at all relevant, I hope that they can provide us with insight into changing the current climate of fear. Unfortunately they don't come with instructions on how to do that.